“We’ve learned the hard way that between the word ‘first’ and the word ‘computer’ there are about 19 adjectives”

— John Hollar, Former CEO of the Computer History Museum

The title of this essay is a simple question. One would think it would have a simple answer, but that doesn’t seem to be the case. There are some who would say quite strongly that it is this person or that, but there is little agreement as to which person to give the credit. (The spreadsheet listed at the end of this article includes claims from several contenders’ Wikipedia articles. These claims are remarkably similar, although each is slightly different, and if read carefully, each is probably true.)

Why is it that there is no consensus on such a recent event? After all, if I ask, “Who invented the telephone?” or “Who invented the telegraph?” almost everyone knows the answers: Alexander Graham Bell and Samuel F. B. Morse. (As we shall see, even these answers aren’t as clearly correct as we might suppose.)

The computer was arguably invented within the last 100 years. It shouldn’t be that hard to know the answer. I’m not asking “who invented the wheel?” after all.

I propose to analyze some of the reasons why this question is hard and take a stab at my own answer to the question posed in the title.

The First Contender

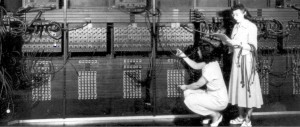

I grew up believing that the first computer was the ENIAC, built in the 1940s and invented by John W. Mauchly and J. Presper Eckert, Jr. [1] They even filed a patent on their invention. There are two problems here. First, their patent was eventually invalidated in court because (according to the court findings) they had taken ideas from an earlier design. [2] I would argue that I am asking a historical question, not a legal one, so court decisions, while possibly relevant, are not definitive.

The second, more serious objection (at least in my own mind) to the ENIAC as the first computer is that the ENIAC was not a stored-program machine. That is to say, the ENIAC was not “programmed” by storing instructions in memory. Rather, the ENIAC was “programmed” by setting a series of dials and switches and plugging in wires, somewhat like a telephone switchboard. Later it did adopt the idea of encoding instructions in numeric form, but by then it was no longer the first.

All modern computers from your Apple Watch to the giant racks of servers at Amazon, Google, Facebook, etc. are stored-program computers. They execute a series of instructions that are stored in memory, generally the same memory that stores the data being processed.
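To make the stored-program idea concrete, here is a minimal sketch of a toy machine whose instructions and data live in the same memory. The instruction encoding (opcode times 100 plus an address) and the four opcodes are invented for illustration; they are not the format of the ENIAC, the EDVAC, or any other historical machine.

```python
# A toy stored-program machine: instructions and data share one memory list.
# The encoding (opcode * 100 + address) is a made-up illustration, not the
# instruction format of any historical computer.

HALT, LOAD, ADD, STORE = 0, 1, 2, 3


def run(memory):
    """Execute numerically encoded instructions held in `memory`, starting at cell 0."""
    acc = 0  # accumulator register
    pc = 0   # program counter: the cell holding the next instruction
    while True:
        opcode, addr = divmod(memory[pc], 100)
        pc += 1
        if opcode == HALT:
            return memory
        elif opcode == LOAD:
            acc = memory[addr]
        elif opcode == ADD:
            acc += memory[addr]
        elif opcode == STORE:
            memory[addr] = acc
        else:
            raise ValueError(f"unknown opcode {opcode}")


memory = [
    105,  # cell 0: LOAD the value in cell 5
    206,  # cell 1: ADD the value in cell 6
    307,  # cell 2: STORE the accumulator into cell 7
    0,    # cell 3: HALT
    0,    # cell 4: unused
    20,   # cell 5: data
    22,   # cell 6: data
    0,    # cell 7: the result is written here
]
result = run(memory)
print(result[7])  # prints 42
```

Because the program is just numbers in memory, changing what the machine does means writing different numbers into the same cells, with no rewiring required.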

So I say, the ENIAC, while a precursor to the modern computer, is not itself a full-fledged computer, in the modern sense of the word.

The Oldest Stored-Program Computing Device

To find the first stored-program device we actually must go back much earlier to the Analytical Engine, designed by Charles Babbage ca. 1840. [3] This device’s program was contained in a set of punched cards, which can be considered a form of storage.

The three problems with the Analytical Engine’s claim to primacy are (1) it is not electronic or even electro-mechanical; it is entirely mechanical, (2) it was never actually built, and (3) the program is stored in punched cards and not in the engine’s “store”. (True, early commercial computers used punched cards for programs as well as data. But the cards were input media, and their contents were read into memory before being executed. The memory itself was reusable; the punched cards, whether Babbage’s or IBM’s, were not.)

The von Neumann Architecture

John von Neumann wrote a paper in 1945, entitled “First Draft of a Report on the EDVAC” [4], that included the idea of storing computer instructions in memory. It is largely due to that paper, and its concept of storing the instructions in memory, that modern computers are often referred to as “von Neumann machines”. But that, too, is controversial because others, including Mauchly and Eckert, had raised that possibility and were collaborating with von Neumann on the “First Draft” mentioned above.

I must add that the “First Draft” is a remarkable paper that described and argued for many aspects of computer design, not only the use of memory to store the program. In this paper he also argued for the use of binary arithmetic, and the necessity of using an all-electronic (as opposed to electro-mechanical) design in order to achieve reasonable speed of computation.

Define Your Terms

As can be seen from the above examples, in order to determine who created the first computer, we have to have a common definition of “computer”.

I would argue that the modern computer has all of the following characteristics:

- General purpose: It can solve a variety of types of problems by being “programmed”, i.e., given a set of instructions that represent the procedure for solving the problem at hand. Moreover, the user of the computer can choose which programs to install and which programs to run. This distinguishes it from an embedded device, which has the other characteristics described below but is used for a specific purpose.

- Stored program: The set of instructions is encoded in a numeric form and stored in memory along with the data that is being used to solve the problem.

- Electronic: It is made from electronic elements: integrated circuits, transistors, or even vacuum tubes. It is neither electro-mechanical (i.e., using relays) nor mechanical (such as Babbage’s Analytical Engine, or even the abacus).

Of course a computer has other characteristics, but I propose to use the above three as the defining characteristics of a “computer”.

I recognize that some historians and computer scientists would disagree with one or more of the above criteria, but they are the ones I’m using. I submit that all modern usage of the term “computer” at least implies all three of these qualities.

By applying the above three criteria we can eliminate the candidates already mentioned: the Analytical Engine because it was mechanical, and the ENIAC because it was not, at least initially, stored program.
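The elimination above can be sketched as a simple check against the three criteria. The yes/no values below are my reading of this essay’s own descriptions of the two machines, with “stored program” taken in the strict sense used here (instructions held in the same memory as the data); the class and function names are purely illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    general_purpose: bool
    stored_program: bool  # instructions held in the same memory as the data
    electronic: bool


def failed_criteria(candidate):
    """Return the labels of the defining criteria that the candidate does not meet."""
    checks = {
        "general purpose": candidate.general_purpose,
        "stored program": candidate.stored_program,
        "electronic": candidate.electronic,
    }
    return [label for label, ok in checks.items() if not ok]


# Values are assumptions drawn from the essay's descriptions, not from a survey.
candidates = [
    Candidate("Analytical Engine", general_purpose=True, stored_program=False, electronic=False),
    Candidate("ENIAC (as first built)", general_purpose=True, stored_program=False, electronic=True),
]

for c in candidates:
    failed = failed_criteria(c)
    verdict = "qualifies" if not failed else "fails: " + ", ".join(failed)
    print(f"{c.name}: {verdict}")
```

A machine qualifies under this definition only when the returned list is empty.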

But does this definition resolve the question? Unfortunately, not to everyone’s satisfaction.

Who Came First?

In the 1940s and 1950s many researchers were working on the problems of automatic computation. These researchers include some of the giants of computer science including not only John von Neumann (mentioned above) but also Alan Turing, and Claude Shannon. With so many people working on the problem (and generally collaborating with one another) it’s not surprising that the same ideas (such as stored program) arose in the writings of several people at more or less the same time.

It is sometimes maddening to try to determine which one deserves the “original” credit. I recently read a book that says the EDSAC computer was the first computer to use the stored-program idea. But another article says it was the second, without naming the first.

Perhaps this whole question is an exercise in historical nitpicking. Many ideas arose at about the same time from multiple individuals. Does it really matter whether the idea of stored-program computers came from von Neumann, or Eckert and Mauchly, or Turing, all of whom published papers about stored-program computers in the mid-1940s, or even Konrad Zuse, who filed two patents on stored-program computers in 1936, ten years earlier than the “First Draft” paper that has caused von Neumann’s name to be associated so strongly with stored-program computers?

Of course it can matter financially, as patent holders can become rich and famous, while also-rans to the patent office are soon forgotten. I argued above that such things are legal rather than historical arguments.

Yet the outcome of such battles can determine the narrative that becomes history. At the beginning of this essay I posed the questions of who invented the telephone and the telegraph. The reason we say that Bell invented the telephone is that he made it to the patent office first. But there is significant evidence that his ideas were preceded by those of Antonio Meucci. [5] And Morse’s telegraph was preceded by several versions invented in England. [6]

Reduction to Practice

The phrase “reduction to practice” is a legal term used in patent law. It means turning an idea into a working machine (or process, etc.). Under U.S. patent law it is not necessary to reduce an idea to practice in order to be granted a patent. In history, I think it fair to give credit both to the originator(s) of an idea and to those who implement it.

As an example, credit for the idea of storing the program in memory can go to von Neumann for his “First Draft” paper. Even if it is disputed that von Neumann deserves full credit for the stored-program idea, it is indisputable that his paper led a number of groups to start implementing ideas found in that paper. The EDVAC computer itself was the specific subject of von Neumann’s paper. But its implementation was preceded by the EDSAC, and that was preceded by the Manchester Small-Scale Experimental Machine, nicknamed “Baby”. [7] Both the Baby and the EDSAC were directly inspired by von Neumann’s paper, and they both include the stored-program concept.

And the Winner is…

So to determine who invented the computer we must deal with primacy, ideas vs. implementation, and the precise definition of “computer.”

Of all the components of my proposed definition of “computer”, I argue that the “stored program” idea, the concept of storing the computer’s instructions in the computer’s memory, is the most important. It is a unique feature among modern inventions, and it makes the computer uniquely powerful. Just look at the number and variety of “apps” on your smart phone to get a sense of the flexibility that the stored-program idea makes possible. You can switch your computer-in-your-pocket from making a phone call, to showing you the weather, to playing a game, to reading a book, to watching a movie, simply by replacing the program (“app”) that you have stored in memory.

So who came up with the idea of storing computer instructions in memory? The general idea was first mentioned not by John von Neumann, but by Konrad Zuse, ten years earlier, or even Charles Babbage, a hundred years earlier. But both Zuse’s and Babbage’s devices stored the program in non-erasable media separate from the data “store” (to use Babbage’s term). In terms of storing instructions in the same kind of memory as the data, the credit does deserve to go to John von Neumann and his unnamed collaborators.

Of course, the stored-program concept is not the only component of a computer design. It is not possible to single out one inventor of this broader set of concepts because the ideas came from many people. I would give credit to at least the following individuals for contributing to the development of the first computer:

- Gottfried Leibniz – Gave structure to the idea of binary arithmetic [8]

- Charles Babbage – The first to design a programmable computing machine

- Ada Lovelace – The first to realize that a computing device (specifically Babbage’s Analytical Engine) could be used to solve any symbolic problem, not just numeric computations. [10]

- George Boole – Inventor of the logical algebra that now bears his name and is a fundamental component of all computer hardware and software [9]

- Alan Turing – Formalized the concepts of an ideal computing machine.

- John von Neumann – Wrote the “First Draft” describing the design of a computer. [4]

- Claude Shannon – Proposed in his master’s thesis that computers can be designed from components that follow Boolean algebra.

- Konrad Zuse – Designed and built a number of early electro-mechanical computers

- John Atanasoff – Created early electronic computing devices. Now credited with many of the ideas that went into the ENIAC.

As for implementation there is actually little dispute that the Manchester Small-Scale Experimental Machine, the “Baby” computer, is the first stored-program general-purpose electronic computer. Thus I give the credit for implementing the first computer to Frederic C. Williams, Tom Kilburn and Geoff Tootill, the men who built the “Baby”.

See this spreadsheet for a list of the devices mentioned in this article and how they measure up to the definition I have given here.

References

[1] ENIAC: Celebrating Penn Engineering History, https://www.seas.upenn.edu/about-seas/eniac/mauchly-eckert.php

[2] Judge declares the ENIAC patent invalid, October 19, 1973, http://www.edn.com/electronics-blogs/edn-moments/4398948/Judge-declares-the-ENIAC-patent-invalid–October-19–1973-

[3] The Babbage Engine, http://www.computerhistory.org/babbage/engines/

[4] First Draft of a Report on the EDVAC by John von Neumann https://web.archive.org/web/20130314123032/http://qss.stanford.edu/~godfrey/vonNeumann/vnedvac.pdf

[5] Antonio Meucci http://www.famousscientists.org/antonio-meucci/

[6] The History of the Electric Telegraph and Telegraphy, http://inventors.about.com/od/tstartinventions/a/telegraph.htm

[7] “Manchester Small-Scale Experimental Machine”, Wikipedia, https://en.wikipedia.org/wiki/Manchester_Small-Scale_Experimental_Machine#cite_note-1

[8] “Explanation of Binary Arithmetic” in Memoires de l’Academie Royale des Sciences by Gottfried Leibniz, http://www.leibniz-translations.com/binary.htm

[9] “The Calculus of Logic” in Cambridge and Dublin Mathematical Journal, Vol. III (1848), pp. 183-98 by George Boole, http://www.maths.tcd.ie/pub/HistMath/People/Boole/CalcLogic/CalcLogic.html

[10] “A Selection and Adaptation From Ada’s Notes found in “Ada, The Enchantress of Numbers,” by Betty Alexandra Toole Ed.D. (Strawberry Press, Mill Valley, CA)”, http://www.agnesscott.edu/lriddle/women/ada-love.htm

[11] ENIAC in Action: Making and Remaking the Modern Computer by Thomas Haigh, Mark Priestley, and Crispin Rope (MIT Press, Cambridge, Massachusetts, and London, England, 2016), https://www.amazon.com/ENIAC-Action-Remaking-Computer-Computing-ebook/dp/B08BSZ9Y1T/ref=sr_1_1?dchild=1&keywords=ENIAC+in+Action&qid=1617325914&sr=8-1

Note: References [4], [8], [9], and [10] are all original writings by the creators of the ideas they describe. I particularly recommend von Neumann’s “First Draft” [4].

I recently finished the book It Began with Babbage: The Genesis of Computer Science, by Subrata Dasgupta, https://smile.amazon.com/Began-Babbage-Genesis-Computer-Science-ebook/dp/B00H9FZ40A/ref=sr_1_1?s=digital-text&ie=UTF8&qid=1479520881&sr=1-1&keywords=It+began+with+Babbage in which the author argues that the “Baby” computer is *not* the first computer because it does not have any I/O (input/output) devices. I don’t include the requirement for I/O devices in my definition, although it seems an implicitly necessary component of a computer; without it the machine could neither take in programs or data nor output results. For that very reason, I disagree with the notion that the “Baby” had no I/O devices, since it would then have been useless. I would say rather that its I/O devices were extremely primitive, but they did exist. A friend of mine likes to say, “Crawl, walk, run, fly”. The “Baby” was clearly only crawling (no pun intended) in terms of processing power and memory size, in addition to its primitive I/O.

Although I disagree with Dasgupta’s conclusion on this point, I still highly recommend his book for anyone interested in this subject.

Today I attended a talk at the Computer History Museum by Thomas Haigh on the subject of ENIAC. He pointed out that ENIAC was converted to a stored-program computer. I had read that elsewhere as well (I believe in Dasgupta’s book mentioned in a previous comment). Haigh claims that this conversion occurred in March of 1948. According to the Wikipedia article listed in the references, the “Baby” computer ran its first program on June 21, 1948. That would make the ENIAC pre-date the “Baby” by a couple of months.

However, the Wikipedia article on the ENIAC claims that it ran its first stored program on September 16, 1948. That would mean the “Baby” still pre-dates the ENIAC’s conversion by a few months.

Haigh has just released a book, ENIAC in Action: Making and Remaking the Modern Computer (see reference [11] at the end of the main article), and I’m eager to read it. Haigh is a historian who (based on his lecture today) has a good understanding of the technology of which he writes. Also, as a historian, he seems careful in his research.

In Haigh’s talk today he had one slide that said that the Eniac is “Often called the first ‘Electronic, digital, general-purpose computer'” and is “a step on the path to the ‘first stored-program computer.'” [inner quotes in the power-point slide from which I quote.]

So, it seems that even by Haigh’s own admission, the “Baby” (which he never mentioned in his talk) edged out the upgraded, stored-program Eniac by a few months.

Haigh also disparaged, in his talk, the entire idea of naming “firsts”. He does have a point.

It is interesting to note that both the improvements to the ENIAC and the development of the “Baby” computer were directly derived from von Neumann’s “First Draft” paper, also listed in the references above, which I again highly recommend reading.

I’m now reading Haigh’s book, referenced above. In it he says: “[A]fter a year’s concerted effort [ENIAC] became, in April of 1948, the first computer to run a program written in the new code paradigm.”

Haigh stated at the beginning of the book that he wants it to be the definitive history of ENIAC. To that end it is heavily laced with footnote references, mostly to primary sources. Nonetheless there is no footnote for this claim.

Also note that the date differs by a month from the claim he made in the lecture. But whether the ENIAC began running stored programs in April, as his book says, or March, as he said in his lecture, it beats the “Baby” computer (which became operational in June) by a couple of months.

I was almost ready to give the “title” back to ENIAC, but then I realized that although the ENIAC was executing instructions using the kind of machine language we use today (op-code and operands, encoded numerically, which Haigh calls “the modern code paradigm”), the instructions were not stored in a read/write memory, but rather in special human-settable, computer-readable storage devices called “function tables”. So I’ll keep “Baby” as the “winner” and fall back to Haigh’s own comment that the ENIAC is “a step on the path to the ‘first stored-program computer.’”

Hello Glenn,

Glad you like the book, and the talk I gave last month.

Looking at your series of posts I think you may be starting to see why I, like most historians, find the whole “first computer” argument rather unhelpful. It depends entirely on what definition of computer you adopt. In the main post you seem to define “first computer” as “first stored-program computer,” but someone who disagreed with that might ask why a computer that doesn’t have a stored program isn’t a computer. Logically speaking, aren’t you changing the question when you add those extra two words between “What was the first” and “computer”?

In your Nov 29 comment you seem to have decided that storing a program in RAM rather than ROM is an essential feature of any true computer. That’s certainly a point of view, but would you say that a device like an Atari VCS that ran code from ROM was not a computer? If so, would copying the ROM into RAM have made it one?

You also note Dasgupta decided that having significant IO capabilities is essential. von Neumann’s “first draft” did specify the existence of a machine writable external medium. Someone could make an argument that if “stored program computer” means a “computer as von Neumann described it” then an external storage medium is essential.

Anyway, all this is exactly why we don’t try to answer the “first computer” question, or even the “first stored program computer” question in our book. What we do attempt is to give a precise definition of the new kind of control system von Neumann described in the first draft, which we call the “modern code paradigm.” The question of which computer was the first to execute a program of this kind is answerable, unlike the other two questions. The answer is clearly ENIAC. Whether that makes it the “first stored program computer” is a question I’m happy to leave to others. As you note, it depends on whether you think a program stored in ROM is still a program.

If you are looking for specifics on the timing of the conversion you will find them on pages 163-164, so the citations are there rather than in the introduction. As the quotes from the log book on p. 164 show, Nick Metropolis started setting up the instruction set on March 29, got the “basic sequence and 2 or 3 orders working” the next day, and on the day after (March 31), ENIAC “was demonstrated for the first time using the new coding scheme.” That must have meant running a simple program of some kind, but we have no idea what it was.

On the other hand, we know exactly what the Monte Carlo program did and it was extremely complicated by the standards of the 1940s. The “first production run” with this code occurred on April 17.

So first proof of principle program run March 31, 1948. First useful production program, the Monte Carlo code, run April 17. In the lecture I was thinking of the former. In the book we’re a little more conservative and go with the latter. Both are clearly some time before June.

Wikipedia is simply wrong about the conversion date, and about many other ENIAC things. In this case it copies Herman Goldstine’s mistake. We discuss this error on page 244. The discussion on pages 243-246, in the section “ENIAC as a Stored Program Computer”, is very relevant to your interests.

Best wishes,

Tom Haigh

Hi Tom,

Thank you so much for your reply. I did indeed enjoy your book and recommend it to anyone interested in the history of computing.

I have certainly come to see the difficulty of determining who made the first computer, and I do agree with John Hollar’s comment that I added to the start of my article about “which of about 19 adjectives you put between ‘first’ and ‘computer’,” a point you also made in your reply. If we don’t put some adjectives in, though, the question is meaningless. I know, I know, that’s exactly your point.

I think, maybe, what I have been trying to say is “computer as people commonly think of it today.” Even that is slippery. I recently asked my (adult) daughter if she considered a smart phone a computer. She said, “I know it is one but I don’t think of it that way.” (Is a smart phone a radio? Who invented the first radio? What *is* a radio?)

I certainly misstepped when I referred to read/write memory, as your counter-example pointed out. I somehow have trouble thinking of a collection of rotary switches as a “memory”. But then I have trouble thinking of a CRT as a memory device, but it certainly was.

So, maybe answering my own question *is* futile. But as the cliche says, it’s not the destination it’s the journey. I’m doing a lot of reading on the history of computing and finding it all interesting.

It is pretty funny that you end up with the definition of first “computer as people commonly think of it today.” A “computer” was originally a person who “computes,” that is, makes advanced calculations, and “compute” derives from the Latin “computare”, which simply means “sum up, reckon, compute”. A computer as a machine is thus a machine that can make calculations and execute operations faster than a human computer.

I wonder why a general-purpose computer must be electronic. The criterion simply eliminates all previous candidates, as electronics hadn’t been invented. The major benefits of electronics are miniaturization and speed, but they are not a must for a “general-purpose calculator”, as a computer is basically an advanced calculator doing a series of calculations and operations.

This criterion simply erases the very fact that the development of the general-purpose computer was principally a continuous refinement of Charles Babbage’s original concept, simply because it took 150 years to complete the original machine.

The principles were transferred to newer technology, while implementing the various advances achieved from mechanical calculators, telecommunication, en-/decryptors and similar devices, and industrial installations using the same elements Babbage integrated into his machine.

It is funny because, if you actually wanted the “first general-purpose computer” without any adjectives, the answer is indisputably Charles Babbage’s “Analytical machine”. The “program” was stored in a “random access memory” consisting of “Physical state of wheels in axes”, it had a primitive I/O system, and the “central processing unit” consisted of a lot of intricate gears.

The only problem is, that the precision of the gears were un-obtainable from blacksmiths and clockmakers of his days – which is why it was only finished with help from cad-cam and cnc-machines a few years ago.

As it is stated in the wikipedia article: “If the Analytical Engine had been built, it would have been digital, programmable and Turing-complete.”

If it had been produced back then, it would probably have been refined into an electromechanical machine by adding an electric motor to speed up the process. And then we’d have seen the later refinement to more advanced electromechanical computers with mechanical relays, and finally the refinement to electronics with vacuum-tube triode flip-flops, cathode-ray tubes, transistors, and integrated circuits.

It is like claiming that the bicycle was a sensational invention of the mid-19th century. The technology used (wheel, chain/mechanical belt, pedal, gear, crankshaft, etc.) had been refined for centuries and millennia. The wooden bicycle was designed by Leonardo Da Vinci, and Kirkpatrick Macmillan et al. simply integrated the existing technology into Baron Karl von Drais’s fifty-year-older “Dandy Horse”, itself derived from preceding carriages.

Charles Babbage was a pioneer ahead of his time, and we simply had to await technological advances before the concept could be finished. Da Vinci never saw the bicycle, even though his concept looks like a 1980s racing bike, but he still has to be credited as the designer. In the same way, Charles Babbage and his “Analytical Machine” should never be underestimated.

Thanks for your comments.

First, let me say that my use of “electronic” in my definition is in no way meant to minimize the contribution or genius of Charles Babbage. My only reason for including “electronic” in my definition is that I do believe that is what most people think of in relation to the term “computer”.

To nitpick a couple of your points: The program storage on the Analytical Engine was not random access–it was sequential access.

I once was showing the reconstruction of a Babbage Difference Engine that was on display at the Computer History Museum in Mountain View, California, to a friend and explaining essentially what you said about “[t]he only problem is, that the precision of the gears were un-obtainable from blacksmiths and clockmakers of his days – which is why it was only finished with help from cad-cam and cnc-machines a few years ago.” A docent came up to me and told me that that is a common misconception about Babbage. The docent claimed that the only problems Babbage had were lack of funding from his sponsors and lack of focus on his part.

Your reference to “computer” as the designation of a human profession reminds me of the time I first heard that usage. It was back in 1967 and I was a young soldier in Ethiopia. I met an Ethiopian about my age named Hamid Mohamed and when I told him I was interested in computers, he said “I used to be one.” It took some explaining on his part for me to understand what he meant.

Tom Haigh, author of several comments above, as well as the book ENIAC in Action: Making and Remaking the Modern Computer (see reference [11] at the end of the main article), wrote me a personal email in which he referenced the recent comment, above, from Daniel Giversen.

With Tom’s permission, I quote the relevant portions of the email:

“Who invented the computer” on YouTube:

From the Computer History Museum: https://www.youtube.com/watch?v=d1pvc9Zh7Tg

She says Babbage did:

https://www.youtube.com/watch?v=QTcyGmlzw_U